DRAM Cell Growth

To Fail or Not to Fail

I remember a case where DRAMs failed, but the manufacturer couldn’t replicate the failure to identify the source. After a while, engineers found that some cells lost their charge when radiation hit them. However, this happened only occasionally, when radiation was emitted on the chip and hit the memory cells at the right incident angle. It took almost a year to trace this behavior back to wafer production, where one manufacturing line used water with slight radioactive contamination.

But failures don’t always trace back to the manufacturing process. DRAMs have also become the target of cyberattacks. A couple of years ago, hackers found that by activating the same memory rows in DDR3 devices over and over again, they could leak charge from, or possibly change the contents of, a nearby memory row. This was the first time the high density of cell rows in a memory had been exploited for an attack (the row hammer attack). Memory manufacturers had to apply quick fixes, such as lowering wordline resistance, among other measures. The final fix in later DRAM designs is to issue extra refresh cycles in such situations, which prevents the row hammer effect without negatively impacting normal performance or power consumption.
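To make the idea of "extra refresh cycles" concrete, here is a minimal software sketch of a counter-based mitigation. It is an illustration only: real devices implement this in hardware, and the details are vendor-specific and not described in this article. The class name `RowHammerGuard`, the `threshold` parameter, and the choice to refresh only the two directly adjacent rows are all assumptions made for this example.

```python
from collections import defaultdict


class RowHammerGuard:
    """Hypothetical model: count row activations and refresh neighbors early."""

    def __init__(self, num_rows, threshold):
        self.num_rows = num_rows
        self.threshold = threshold          # assumed activation limit per refresh window
        self.activations = defaultdict(int)

    def activate(self, row):
        """Record one activation; return the rows that get an extra refresh."""
        self.activations[row] += 1
        if self.activations[row] < self.threshold:
            return []
        # The aggressor row was hit too often: refresh its physical neighbors
        # (the potential victims) and restart the count for that row.
        self.activations[row] = 0
        return [r for r in (row - 1, row + 1) if 0 <= r < self.num_rows]

    def end_refresh_window(self):
        """A regular refresh restores every row, so all counters start over."""
        self.activations.clear()


guard = RowHammerGuard(num_rows=8, threshold=3)
for _ in range(3):
    refreshed = guard.activate(4)
print(refreshed)  # neighbors of row 4 after the third activation
```

Under normal access patterns the threshold is never reached, so no extra refreshes are issued, which is why such a scheme need not cost performance or power in regular operation.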

All of these findings are the result of extensive testing. While testing has always been an important aspect of bringing DRAM to market, these examples show that it is more important today than ever. The more complex the DRAM manufacturing process gets and the more specialized its application is, the less you can predict how a DRAM will actually perform in the target application without extensive application-specific testing. In addition, increasing clock and data transmission speeds cause more and more signal integrity issues in applications that are not flawlessly designed. As most manufacturers stick to their conventional standard tests, we fill the gap with our new DRAM test board that can simulate actual application environments.

What’s next?

3D DRAM is hailed as the next big thing. But given the challenges we are facing with the evolution of memory transistor devices and the extreme low-leakage requirements of DRAM, I can hardly see how this will happen any time soon. We’ve been trying to find a stacking solution for the 30 years I have been in the business. Stacking is easier in flash, as you are dealing with polycrystalline silicon and have no low-leakage or stringent floating-transistor requirements. The flash principle is simply based on a shift of threshold voltage caused by trapped charges. In DRAM, you need monocrystalline structures with flawless low-leakage behavior and no floating bulk, so that the memory cell transistor switches off perfectly and the cell leaks little enough to hold its charge for 64 ms.
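A rough back-of-the-envelope calculation shows why the leakage requirement is so demanding. The sketch below uses the capacitor relation I = C · ΔV / t to estimate the largest leakage current a cell can tolerate over the 64 ms retention time. The cell capacitance (10 fF) and the tolerable voltage drop (0.1 V) are illustrative assumptions, not figures from this article or from any specific device.

```python
def max_leakage_current(cell_capacitance_f, tolerable_voltage_drop_v, retention_time_s):
    """Largest constant leakage current (in amperes) that keeps the voltage
    drop on the cell capacitor within the tolerable limit for the whole
    retention time, using I = C * dV / t."""
    return cell_capacitance_f * tolerable_voltage_drop_v / retention_time_s


# Assumed example values: 10 fF cell, 0.1 V tolerable drop, 64 ms retention.
budget_a = max_leakage_current(10e-15, 0.1, 64e-3)
print(f"Leakage budget: {budget_a * 1e15:.1f} fA")
```

With these assumed values the budget comes out on the order of 16 femtoamperes per cell, which gives a sense of why a transistor that does not switch off cleanly is unacceptable in DRAM.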

But 3D DRAM still inspires engineers to come up with new approaches, and we will see pretty soon whether it makes a breakthrough. After all, the past 55 years have shown the boundless power of innovation in DRAM.

Author: Dr. Peter Pöchmüller, CEO of Neumonda

This article is also published on the Neumonda website: Happy Birthday DRAM! (neumonda.com)

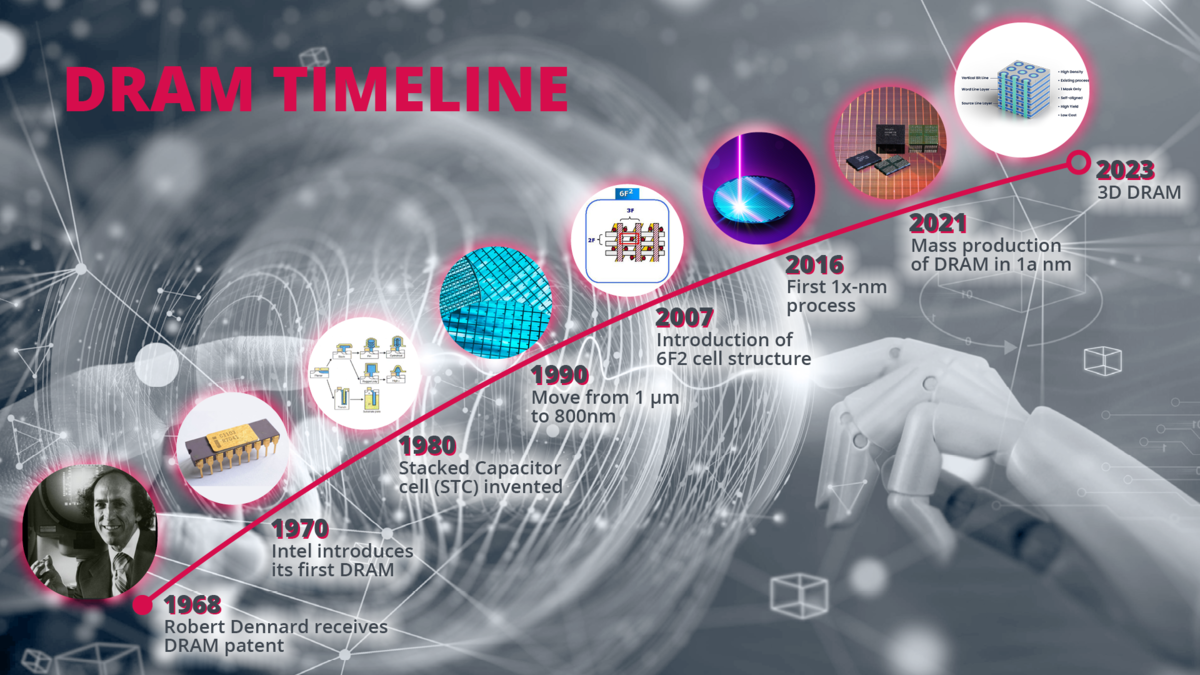

55 Years of Innovation in DRAM